A self-driving car once seemed like something out of a science fiction movie. Thanks to today's technology revolution, it is now possible to own or ride in such vehicles. Recently, Baidu launched the Apollo RT6, a new robotaxi (self-driving cab) model, in China. Robotaxis have already provided nearly one million rides across almost ten Chinese cities, and this new model is expected to be the next big thing in the car market.

Self-driving is the latest AI trend in the automotive industry. These driverless vehicles rely on technologies such as artificial intelligence and machine learning to run without human intervention. Let's dig deeper into the concept.

What is a self-driving car?

Self-driving cars operate entirely by themselves, without any human input. Their systems consist of sensors, actuators, software, servers, power supplies, and automated controls. Together, these components provide the following crucial functions:

- Sensors: Collect data on speed, direction, acceleration, and possible obstacles.

- Inertial navigation system: Tracks the vehicle's position in real time.

- Car-navigation system: Provides the vehicle's latitude and longitude using GPS (Global Positioning System).

- Electronic map: Contains data about road layout and congestion.

- Light detection and ranging (LiDAR): Maps the surroundings to help avoid crashes and trigger emergency braking.

Other components include the vehicle body, cameras, the infotainment system, power steering, and advanced driver-assistance systems (ADAS).
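An inertial navigation system estimates position by dead reckoning: it integrates acceleration and rotation measurements over time. The Python sketch below is a simplified 2D illustration under assumed inputs (a hypothetical list of forward-acceleration and yaw-rate samples taken at a fixed time step); real systems work in 3D and accumulate drift, which is one reason they are combined with GPS.

```python
import math
from typing import Iterable, Tuple


def dead_reckon(
    x: float,
    y: float,
    heading_rad: float,
    speed: float,
    samples: Iterable[Tuple[float, float]],
    dt: float,
) -> Tuple[float, float, float, float]:
    """Integrate hypothetical (forward_accel m/s^2, yaw_rate rad/s) samples.

    Simplified 2D dead reckoning: update speed and heading first, then
    advance the position along the new heading for one time step.
    """
    for forward_accel, yaw_rate in samples:
        speed += forward_accel * dt
        heading_rad += yaw_rate * dt
        x += speed * math.cos(heading_rad) * dt
        y += speed * math.sin(heading_rad) * dt
    return x, y, heading_rad, speed


if __name__ == "__main__":
    # One second of straight driving at 10 m/s, sampled at 10 Hz.
    print(dead_reckon(0.0, 0.0, 0.0, 10.0, [(0.0, 0.0)] * 10, dt=0.1))
```

Any small bias in the acceleration or yaw-rate samples is integrated at every step, so the position error grows over time unless an external fix such as GPS corrects it.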

Types of sensors used in self-driving cars

- RADAR

Radio detection and ranging (RADAR) sensors are crucial for measuring the speed and position of surrounding objects. The sensor transmits radio waves, which strike obstacles and reflect back; an antenna receives these echoes, and the signals are then analyzed. Most driverless cars use frequency-modulated continuous wave (FMCW) radar. These sensors are usually fitted behind the bumper or the company logo. Tesla has used RADAR in its Autopilot system to detect potential accidents seconds before they occur.
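In an FMCW radar, the transmitted frequency sweeps linearly (a "chirp"), so an echo delayed by the round trip appears as a beat frequency proportional to range, while target motion adds a Doppler shift. The Python sketch below applies the textbook relations R = c·f_b·T/(2B) and v = f_d·λ/2. The 77 GHz carrier, 300 MHz bandwidth, and 50 µs chirp are assumed example values; real radars separate range and Doppler with FFT processing over many chirps, which is not shown.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def fmcw_range(beat_freq_hz: float, bandwidth_hz: float, chirp_time_s: float) -> float:
    """Range from the beat frequency of one linear chirp: R = c * f_b * T / (2 * B)."""
    slope = bandwidth_hz / chirp_time_s  # chirp slope in Hz per second
    return SPEED_OF_LIGHT * beat_freq_hz / (2.0 * slope)


def doppler_velocity(doppler_freq_hz: float, carrier_freq_hz: float) -> float:
    """Radial velocity from the Doppler shift: v = f_d * wavelength / 2."""
    wavelength = SPEED_OF_LIGHT / carrier_freq_hz
    return doppler_freq_hz * wavelength / 2.0


if __name__ == "__main__":
    # Assumed 77 GHz radar sweeping 300 MHz in 50 microseconds.
    r = fmcw_range(beat_freq_hz=2.0e6, bandwidth_hz=300e6, chirp_time_s=50e-6)
    v = doppler_velocity(doppler_freq_hz=5_133.0, carrier_freq_hz=77e9)
    print(f"range = {r:.1f} m, radial velocity = {v:.2f} m/s")
```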

- LIDAR

LiDAR compensates for the weaknesses of RADAR. A LiDAR unit emits around 100,000 laser pulses every second and measures how long each one takes to return. These measurements form a point cloud, from which 3D models of objects in the environment are created. Using this data, the system recognizes objects such as cars and pedestrians and predicts their movements to decide how the vehicle should respond. Several self-driving projects, including Google's self-driving car and Uber, use LiDAR.
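Each LiDAR point comes from timing a laser pulse's round trip and combining the resulting range with the beam's direction. The sketch below shows that conversion under simplifying assumptions: the function names are hypothetical, angles are in degrees, and each return is a (round-trip time, azimuth, elevation) tuple in the sensor's own frame.

```python
import math
from typing import Iterable, List, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s

Point = Tuple[float, float, float]


def tof_to_range(round_trip_s: float) -> float:
    """The pulse travels to the target and back, so range = c * t / 2."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert a range and beam direction (spherical) into sensor-frame x, y, z."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def build_point_cloud(returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Each return is a hypothetical (round_trip_s, azimuth_deg, elevation_deg) tuple."""
    return [to_point(tof_to_range(t), az, el) for t, az, el in returns]


if __name__ == "__main__":
    # A return after 200 ns corresponds to a target roughly 30 m away.
    cloud = build_point_cloud([(200e-9, 0.0, 0.0), (200e-9, 90.0, 0.0), (100e-9, 45.0, 10.0)])
    for point in cloud:
        print(tuple(round(c, 2) for c in point))
```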

- Ultrasonics

Ultrasonic sensors work on the same principle as SONAR (sound navigation and ranging) and are vital for parking and for tracking nearby obstacles. They emit sound waves at frequencies between 40 kHz and 70 kHz. Tesla's Model S and Model X carry 12 ultrasonic sensors that detect obstacles at close range.
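Ultrasonic ranging uses the same echo principle, but with sound, whose speed depends on air temperature. The sketch below uses the common approximation v ≈ 331.3 + 0.606·T m/s (T in °C) and adds a simple parking alert; the 1.0 m and 0.3 m thresholds are hypothetical values chosen for illustration.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temperature temp_c (deg C)."""
    return 331.3 + 0.606 * temp_c


def echo_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Sound travels to the obstacle and back, so distance = v * t / 2."""
    return speed_of_sound(temp_c) * echo_time_s / 2.0


def parking_alert(distance_m: float, warn_m: float = 1.0, stop_m: float = 0.3) -> str:
    """Map a distance to a hypothetical three-level parking warning."""
    if distance_m <= stop_m:
        return "STOP"
    if distance_m <= warn_m:
        return "WARN"
    return "CLEAR"


if __name__ == "__main__":
    d = echo_distance(0.005)  # 5 ms round trip at 20 deg C
    print(f"{d:.2f} m -> {parking_alert(d)}")
```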

- Cameras

Self-driving cars also sense their surroundings using cameras, which fall into two classes: infrared (IR) and visible (VIS). IR cameras are less affected by weather and lighting conditions and are highly sensitive to living things such as pedestrians and animals. They are further divided into near-infrared (NIR) and mid-infrared (MIR) cameras.

VIS cameras capture the same wavelengths as the human eye (roughly 400 to 780 nm). Mounting two VIS cameras a fixed distance apart enables stereo vision, from which a 3D representation of the scene around the vehicle can be produced. VIS cameras offer high resolution, can distinguish colors, and are inexpensive.
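Stereo vision recovers depth from disparity, the horizontal pixel shift of the same point between the left and right images. For rectified cameras the standard relation is Z = f·B/d, with focal length f in pixels, baseline B in meters, and disparity d in pixels. The sketch below assumes those inputs are already known; finding matching points between the two images, the hard part in practice, is not shown, and the camera values are hypothetical.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity means the point is at infinity (or the match failed).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Assumed camera: 700 px focal length, 12 cm baseline.
    for d in (42.0, 8.4, 2.1):
        print(f"disparity {d:>5} px -> depth {stereo_depth(700.0, 0.12, d):.1f} m")
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for nearby ones, which is one reason a wider baseline helps at long range.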

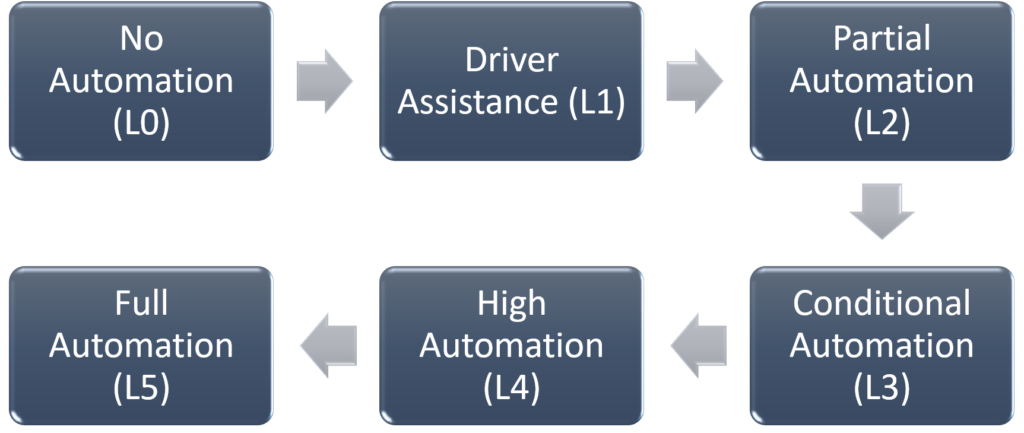

Levels of automation

The Society of Automotive Engineers (SAE) defines six levels of driving automation, from Level 0 to Level 5, in its J3016 standard.

Level Zero

There is no automation at this level. The driver is responsible for operating and monitoring the vehicle. Even if the system provides warnings or alerts, the driver must stay focused at all times.

Level One

The driver is still fully responsible for driving but receives some assistance with either steering or braking and accelerating. Examples include:

- Adaptive cruise control: Adjusts speed to keep a safe distance from the vehicle ahead

- Lane-keeping assistance: Keeps the vehicle within its lane
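Adaptive cruise control is often described with a constant time-gap policy: keep a following distance that grows with speed, and never exceed the driver's set speed. The sketch below is a simple proportional controller built on that idea; the gains, the 1.8 s time gap, the 5 m standstill distance, and the acceleration limits are hypothetical values, not those of any production system.

```python
from typing import Optional


def acc_command(
    v_ego: float,
    v_set: float,
    gap_m: Optional[float] = None,
    v_lead: Optional[float] = None,
    time_gap_s: float = 1.8,
    standstill_m: float = 5.0,
    k_gap: float = 0.2,
    k_rel: float = 0.6,
    k_speed: float = 0.4,
    a_min: float = -3.5,
    a_max: float = 2.0,
) -> float:
    """Return a requested acceleration (m/s^2) for a simple time-gap ACC.

    All gains and limits are hypothetical illustration values.
    """
    # Speed control: move toward the driver's set speed.
    a_cmd = k_speed * (v_set - v_ego)
    if gap_m is not None and v_lead is not None:
        # Gap control: the desired distance grows linearly with our own speed.
        desired_gap = standstill_m + time_gap_s * v_ego
        a_follow = k_gap * (gap_m - desired_gap) + k_rel * (v_lead - v_ego)
        # Take the more cautious of the two requests.
        a_cmd = min(a_cmd, a_follow)
    # Respect comfort and braking limits.
    return max(a_min, min(a_max, a_cmd))


if __name__ == "__main__":
    # Closing fast on a slower car 20 m ahead.
    print(acc_command(25.0, 30.0, gap_m=20.0, v_lead=20.0))  # → -3.5 (braking limit)
```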

Level Two

Level 2 is partial automation: the system provides continuous assistance with acceleration, braking, and steering. The driver must monitor these functions and take control whenever necessary. Highway pilot systems are an example of partial automation.

Level Three

Level 3 is conditional automation. Under limited conditions, the system takes full control of the vehicle and alerts the driver when it needs them to take over. RADAR technology is commonly used to sense the surroundings at Level 3. The Audi A8 was designed with Level 3 capability; General Motors' Super Cruise and Tesla's Autopilot are often mentioned alongside it, but they are generally classified as Level 2 because the driver must keep supervising.

Level Four

Level 4 is high automation. Within its operating conditions, the vehicle handles driving on its own and can react instantly to emergencies; occupants do not need to pay attention or even sit in the driver's seat. Outside those conditions, such as in extreme weather, a human may still need to drive. Google's self-driving car prototypes are a popular example of high automation.

Level Five

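Level 5 is full automation: the vehicle can drive under all conditions without any human involvement. Robotaxis are often presented as the goal of full automation, although today's services still operate within limited areas. Such vehicles must be able to process both external information (the road environment) and internal information (the state of the machine) through the vehicle's information technology.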
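The division of responsibility across these levels can be summarized as a small lookup. The Python sketch below encodes a simplified reading of the SAE levels as described in this article; the helper names are hypothetical, and the J3016 standard itself contains many more details (for example, the precise definition of the conditions under which a feature may operate).

```python
from enum import IntEnum


class SAELevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_monitor(level: SAELevel) -> bool:
    """Levels 0-2: the human drives or supervises at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def driver_needed_as_fallback(level: SAELevel) -> bool:
    """Up to Level 3, a human must be ready to take over when asked."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


def limited_to_certain_conditions(level: SAELevel) -> bool:
    """Automated features at Levels 1-4 work only in limited conditions; Level 5 works everywhere."""
    return SAELevel.DRIVER_ASSISTANCE <= level <= SAELevel.HIGH_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, driver_must_monitor(level),
              driver_needed_as_fallback(level), limited_to_certain_conditions(level))
```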

Famous self-driving cars

Cruise

Cruise combines ride-sharing with self-driving technology to promote driverless transportation. Its cars are all-electric, with zero tailpipe emissions. The company builds its vehicles with cutting-edge AI and robotics technology and puts them through stringent simulation testing before they reach the market, to ensure compliance with all relevant road laws. Thanks to its modular design, a Cruise vehicle is built to last 1 million miles, about six times longer than an average car.

Cruise has begun mapping the roads of Dubai ahead of a planned robotaxi launch there in 2023.


Tesla

Tesla is already a giant in the electric vehicle market. Tesla's Autopilot is a driver-assistance system that helps the car navigate within a lane and through more complex situations while the driver supervises. Autopilot features are updated regularly to make the system smarter. It will be interesting to see whether full automation arrives in the coming years.

Waymo

Waymo is a subsidiary of Alphabet Inc. Its autonomous cars have driven 20 million miles, and the company is working toward full (Level 5) automation. Waymo's taxi service currently operates in Phoenix. The sensors on these cars provide 360° vision, day and night, and generate a 3D picture of the surroundings showing both stationary and moving objects.

Waymo and Uber have partnered to deploy driverless trucks.

Argo AI

Argo AI is working toward Level 4 automated cars for goods delivery and ride-sharing. The company is building a fully integrated system that combines sensors such as LiDAR, RADAR, and cameras. Argo AI uses machine learning to overcome difficult problems such as perception and decision-making. It has started operations in Miami and Austin.

Pony.ai

Pony.ai aims to build smart cars that can handle driving in the complex situations found in suburbs. It uses AI to improve the self-driving experience. Pony.ai believes that self-driving cars can be brought to market more efficiently through a practical engineering strategy.

Like Baidu, Pony.ai has been granted a permit to operate driverless taxis in China.

Challenges involved in self-driving cars

Great revolutions often come with great challenges!

Assessment and verification

Self-driving cars require stringent testing, because any failure can directly endanger human lives. Several established standards cover vehicle design, safety, service, and maintenance; ISO 26262, for example, addresses automotive functional safety. Self-driving cars, however, go far beyond conventional testing. Their perception, analysis, and decision-making must be validated with a dedicated approach, typically against large datasets, because these functions are built with machine learning. Verifying machine learning algorithms in this way remains a difficult problem.

Building failure-resilient systems is another challenge: they require reliable, independent, redundant subsystems so that if one fails, another can take over.
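One common way to let redundant subsystems cover for each other is majority voting across three independent channels, as in triple modular redundancy. The sketch below takes the median of three sensor readings and flags channels that disagree beyond a tolerance; the function name, the tolerance, and the speed readings are hypothetical, and this illustrates the technique rather than any manufacturer's design.

```python
from statistics import median
from typing import List, Sequence, Tuple


def vote(readings: Sequence[float], tolerance: float) -> Tuple[float, List[int]]:
    """Median vote across redundant channels.

    Returns the voted value and the indices of channels that disagree with it
    by more than `tolerance`. Raises if no majority of channels agree.
    """
    if len(readings) < 3:
        raise ValueError("majority voting needs at least three channels")
    voted = median(readings)
    suspects = [i for i, r in enumerate(readings) if abs(r - voted) > tolerance]
    if len(suspects) > len(readings) // 2:
        raise RuntimeError("no majority agreement; hand over to a safe fallback")
    return voted, suspects


if __name__ == "__main__":
    # Hypothetical wheel-speed readings (m/s); channel 2 has failed high.
    print(vote([20.1, 19.9, 35.0], tolerance=1.0))  # → (20.1, [2])
```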

Reliability and security

Safety and trust go hand in hand. By one estimate, a driverless car would need to travel around 291 million miles without an accident to demonstrate its reliability, a bar high enough to slow deployment. The car must also safely handle lane changes, left turns, corners, zigzag motion, and dark objects at night. Self-driving cars must be intelligent enough to deal with all of these situations.
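Figures of this size come from a zero-failure statistical argument: if a car fails at rate r per mile, the chance of driving n miles without a failure is (1 − r)^n, so demonstrating a rate no higher than r at confidence C requires n ≥ ln(1 − C) / ln(1 − r). The sketch below evaluates that bound. The benchmark of one failure per 100 million miles is an assumed round number, not the basis of the 291 million figure above; it gives about 300 million miles at 95% confidence, which shows how strongly the result depends on the chosen rate and confidence level.

```python
import math


def failure_free_miles(rate_per_mile: float, confidence: float) -> int:
    """Miles that must be driven with zero failures to show, at the given
    confidence, that the true failure rate is no higher than rate_per_mile.

    Solves (1 - rate)^n <= 1 - confidence for the smallest whole n.
    """
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-rate_per_mile))


if __name__ == "__main__":
    # Assumed benchmark: one failure per 100 million miles.
    for conf in (0.90, 0.95, 0.99):
        miles = failure_free_miles(1e-8, conf)
        print(f"{conf:.0%} confidence: {miles / 1e6:.0f} million miles")
```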

Lack of trust strongly affects how quickly these cars are deployed. In March 2018, for example, a self-driving Uber prototype struck and killed a pedestrian crossing a road.

Legal objections

Companies that build self-driving cars bear responsibility for any accidents those cars cause. Rules and regulations must therefore be updated to account for these vehicles on public roads, and policy must address the concerns of potential consumers clearly and concisely.

Financial burdens

Developing and adopting driverless vehicles is expensive. Sensors, communication devices, and automation hardware will be available only in premium-tier production cars, which raises the overall cost for the end user. Robotaxis and ride-sharing are among the few ways to tackle this problem. According to a study of robotaxi costs under a ride-sharing model, fares depend primarily on the vehicles' utilization rate, that is, the share of time they spend transporting passengers: the more of their time robotaxis spend carrying passengers, the lower the cost of each trip.
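The effect of utilization on fares follows from spreading fixed costs over paid miles. The sketch below uses a deliberately simple cost model with hypothetical numbers (annual fixed cost, service hours, average speed, and per-mile operating cost are all assumptions) to show that doubling utilization halves the fixed-cost share of each passenger mile.

```python
def cost_per_passenger_mile(
    annual_fixed_cost: float,
    service_hours_per_year: float,
    utilization: float,
    avg_speed_mph: float,
    operating_cost_per_mile: float,
) -> float:
    """Fixed costs (vehicle, sensors, insurance) are spread over paid miles only."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    paid_miles = service_hours_per_year * utilization * avg_speed_mph
    return annual_fixed_cost / paid_miles + operating_cost_per_mile


if __name__ == "__main__":
    # Hypothetical inputs for one robotaxi.
    for u in (0.25, 0.5, 0.75):
        c = cost_per_passenger_mile(100_000, 5_000, u, 20, 0.30)
        print(f"utilization {u:.0%}: ${c:.2f} per passenger mile")
```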

The progress made by self-driving car companies is impressive, but the technology is still a few years away from being fully developed and deployed.
